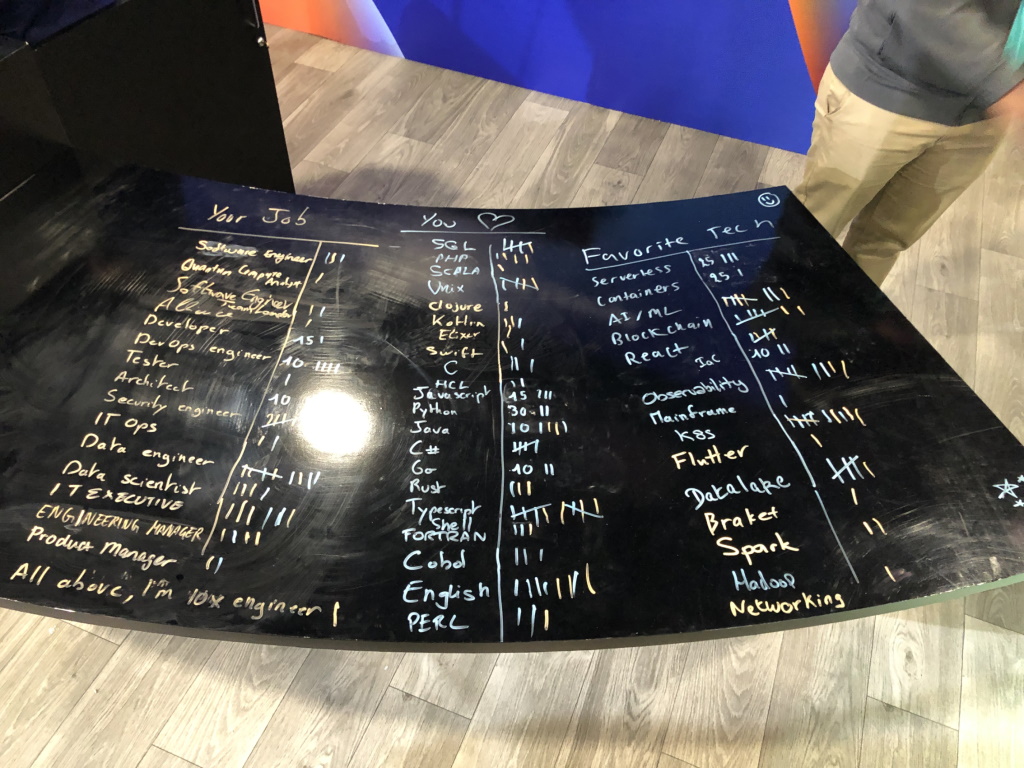

This story started back in December 2021 at the first post-COVID AWS re:Invent conference. We were staffing the Welcome Desk of the AWS Developer Community Lounge. The first few minutes, as people started to flow in, were awkward. People walked past the Welcome Desk, and as soon as we made eye contact, they walked away in the opposite direction. Then an idea came to us: we were missing an icebreaker activity. We started running mini-polls at the Welcome Desk, asking attendees about their favorite programming language, favorite swag, favorite AWS service, and so on. Below is a picture of how we used the Welcome Desk to poll attendees.

As we are always looking to improve our customers’ experience, we quickly imagined how we could enhance the setup. Questions like “What if people could vote on touch screens and see poll results in real time?” popped up. We ended up with this mock-up of our vision.

As passionate tech people, we find that the gap between an idea and its implementation can be filled pretty easily. It is just a matter of time and motivation. We wrote down a list of requirements and a first general design on a whiteboard. So, what are those requirements? First, there is the risk of unreliable internet connectivity, as is usual at conferences and in expo halls. On the bright side, we don’t need to build custom touch screens for this application, as iOS, Android, or Windows tablets are suitable for this purpose. In addition, we anticipate using the application on smartphones. We are a team of four AWS Developer Advocates building this application alongside many other activities. Thus, we can’t afford to build multiple versions of the application for all the operating systems we want to support. We also can’t afford to spend time operating our backend infrastructure. It has to be simple yet secure and reliable. As the application is used only during in-person events, our backend infrastructure must also run at a minimal cost. The poll results on the dashboard must update in real time and render on standard TVs or monitors. Finally, we have to build the product with the developer skills we have: we are all C# developers.

Let’s summarize our requirements:

- Resilient to unreliable internet connectivity.

- Frontend application is available on iOS, Android, and Windows, on tablets and smartphones.

- Seasonal use

- Minimal backend infrastructure operation overhead.

- Minimal backend infrastructure cost.

- Secure

- Reliable

- Near real-time dashboard update.

- Render dashboard on standard TVs and monitors.

- C# / .NET skills

Based on this list, we have designed the application architecture that I detail in the next sections.

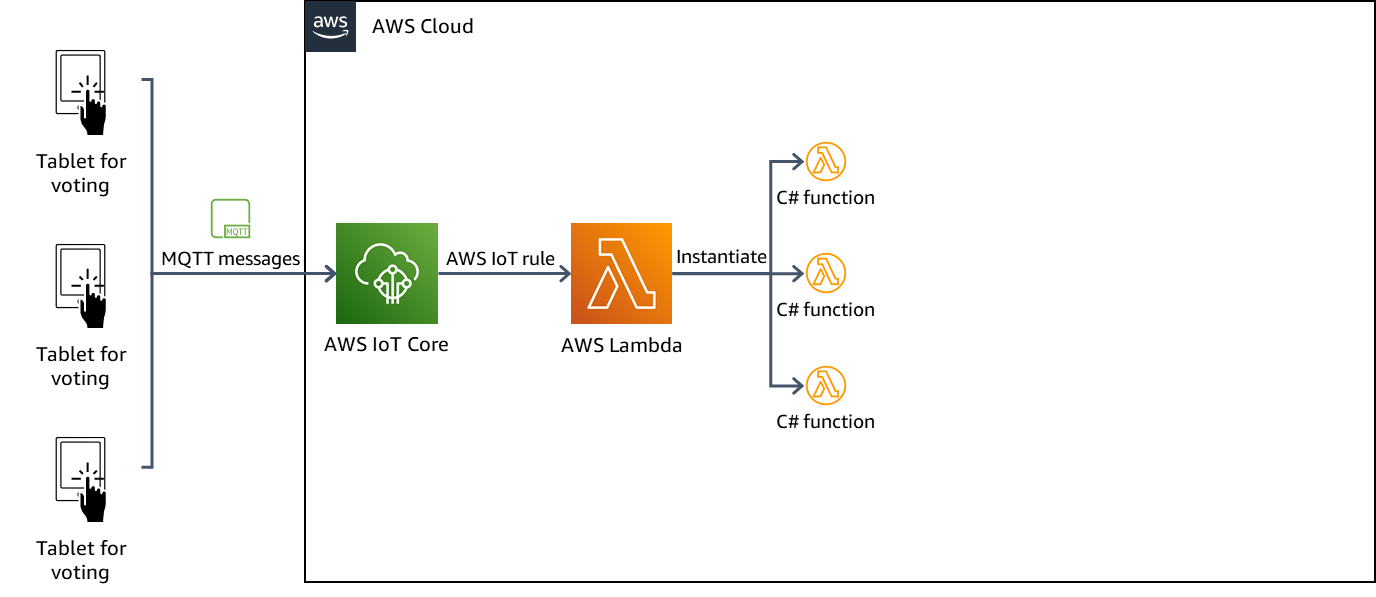

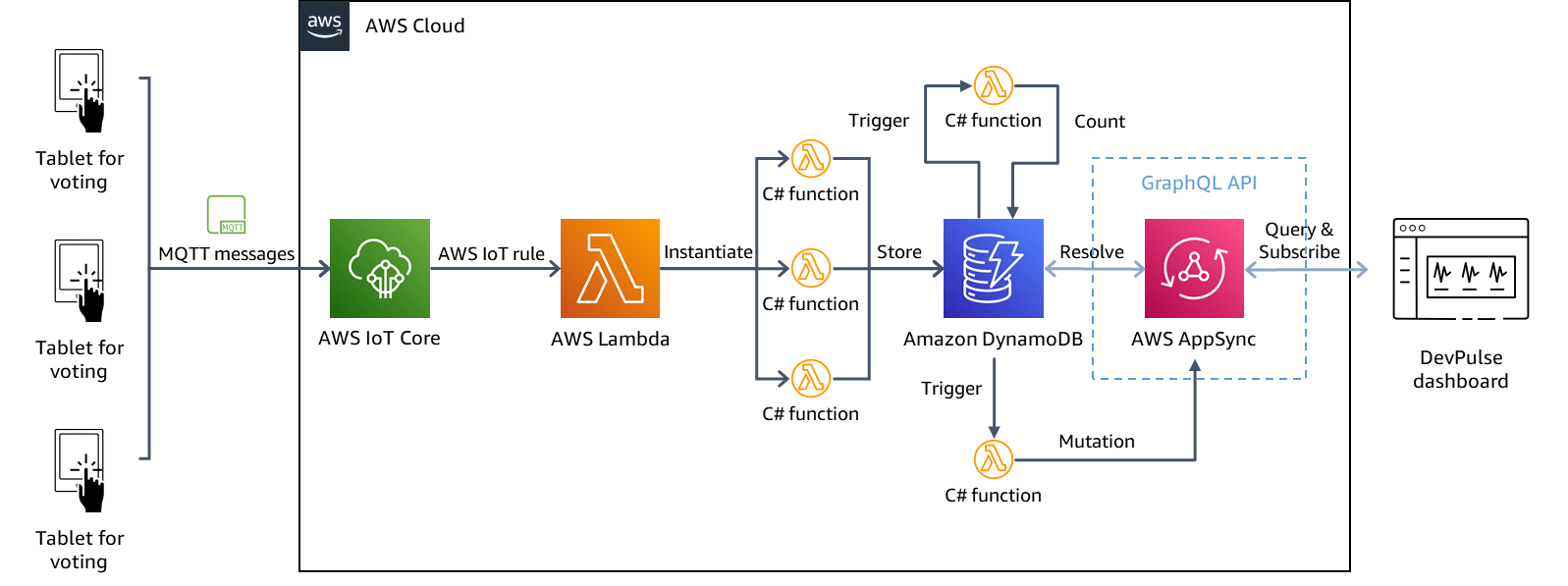

Application General Architecture

Let’s go through the application architecture, starting from the attendee’s perspective. Attendees vote on tablets. To deal with unreliable internet connectivity, we use the MQTT protocol to send messages to our backend rather than synchronous API calls. Each vote is sent as an MQTT message. If the poll has multiple questions, each answer is an independent vote and thus an independent message. When the application has no connectivity, it buffers the messages locally until network connectivity is back. We use AWS IoT Core as our message broker. It enables us to connect our tablets easily and reliably without provisioning or managing servers. It has built-in support for the MQTT protocol and offers device authentication through certificates and end-to-end encryption to secure communication. From a cost perspective, as of July 7, 2023, it costs $1 per million MQTT messages in the US East (Ohio) region, up to 1 billion messages. In our case, this is cost-effective. Other costs apply, such as connectivity costs. You can find the pricing details here. Device authentication through certificates is important because we don’t want to collect any PII. We authenticate the tablets and not the users who are answering the polls. The application is used at in-person events. We have staff at the booth to ensure people use the application properly, and we trust people not to vote multiple times.
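To make the local buffering idea concrete, here is a minimal sketch. It is not the code of our application: `IVoteTransport`, `BufferedVotePublisher`, and `InMemoryTransport` are hypothetical names introduced for illustration, the transport interface stands in for whatever MQTT client is used, and the buffer lives in memory only (a real application would probably persist it and guard it against concurrent access).

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical abstraction over the MQTT client; not part of any library.
public interface IVoteTransport
{
    bool IsConnected { get; }
    Task PublishAsync(string topic, string payload);
}

// Buffers votes while offline and sends them in order once connected.
public sealed class BufferedVotePublisher
{
    private readonly IVoteTransport transport;
    private readonly string topic;
    private readonly Queue<string> pending = new();

    public BufferedVotePublisher(IVoteTransport transport, string topic)
    {
        this.transport = transport;
        this.topic = topic;
    }

    public int PendingCount => pending.Count;

    // Queue the vote first, then try to send everything that is waiting.
    public async Task SubmitAsync(string votePayload)
    {
        pending.Enqueue(votePayload);
        await FlushAsync();
    }

    // Call this again whenever connectivity comes back.
    public async Task FlushAsync()
    {
        while (pending.Count > 0 && transport.IsConnected)
        {
            try
            {
                await transport.PublishAsync(topic, pending.Peek());
                pending.Dequeue(); // remove only after a successful publish
            }
            catch (Exception)
            {
                break; // keep the message and retry on the next flush
            }
        }
    }
}

// Test double used to illustrate the behavior without a real broker.
public sealed class InMemoryTransport : IVoteTransport
{
    public bool IsConnected { get; set; }
    public List<string> Sent { get; } = new();

    public Task PublishAsync(string topic, string payload)
    {
        Sent.Add(payload);
        return Task.CompletedTask;
    }
}
```

With this shape, a vote submitted while `IsConnected` is false stays in the queue, and a later call to `FlushAsync` delivers it once the connection is restored.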

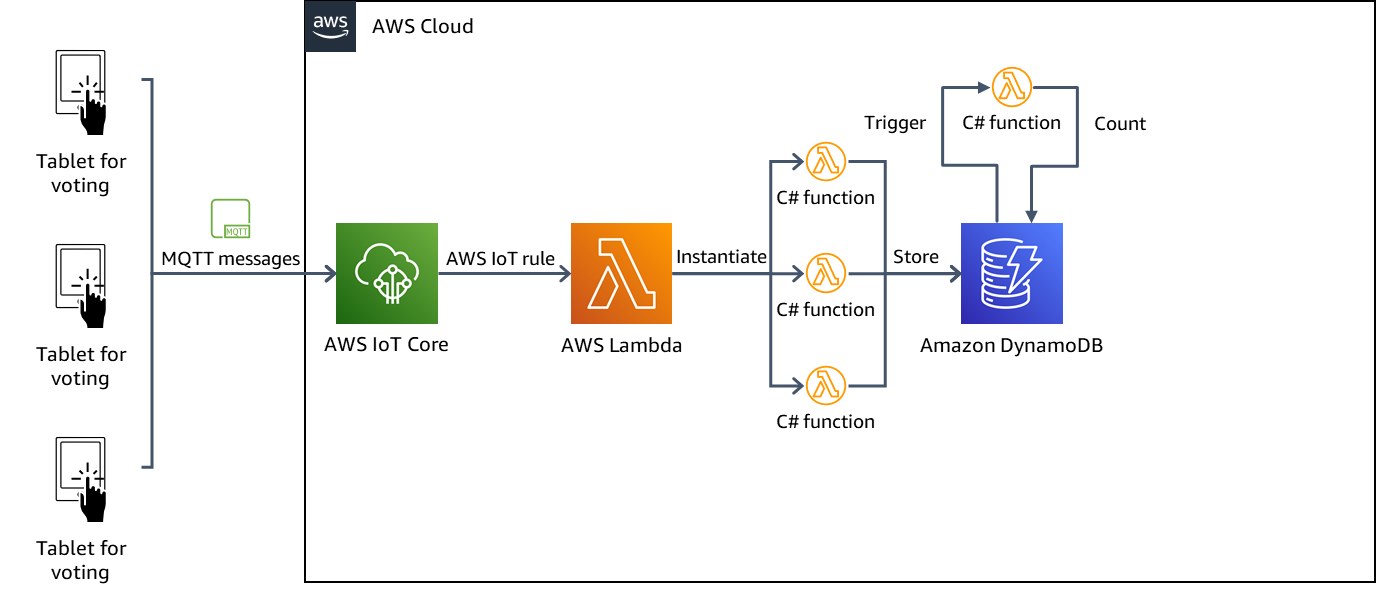

On the backend side, we set up an AWS IoT rule. It defines what to do when new messages reach AWS IoT Core. In our use case, each message represents a vote. Rather than updating a running total for each answer to a poll question, we prefer to store each vote individually in a database. It allows us to compute other metrics after the fact. There are AWS IoT rule actions that store each message directly in a DynamoDB table without writing a single line of code. For example, the DynamoDBv2 action stores each attribute in the message payload as a separate column in the DynamoDB table. In our case, it does not fit our needs because we must implement a specific model to apply the aggregation pattern. I’ll come back to this in the next paragraph. We therefore decided to use the Lambda action. This action invokes an AWS Lambda function, passing in the MQTT message. AWS Lambda is a serverless, event-driven computing service. You can run code for virtually any type of application or backend service without provisioning or managing servers (although servers still exist behind the scenes). Depending on the number of incoming messages, AWS Lambda can run multiple instances of the function concurrently and later scale back down to 0.

Our Lambda function simply stores the received MQTT messages in a DynamoDB table. Amazon DynamoDB is a fully managed, serverless, key-value NoSQL database designed to run high-performance applications at any scale. As we want to maintain near real-time aggregation metrics, such as the total number of votes for an answer to a question, we use the Amazon DynamoDB stream feature and a global secondary index, as advised in the documentation. A DynamoDB stream captures a time-ordered sequence of item-level modifications and stores this information in a log for up to 24 hours. The cool thing is that DynamoDB is integrated with Lambda, so we can trigger a Lambda function to respond to events in the DynamoDB stream.

What does it mean for us? We leverage this capability so that each time we store a new vote in the table, it triggers another C# Lambda function. This second Lambda function updates the total number of votes for an answer to a question. Our table’s primary key is a composite key that uses the question as the partition key plus a sort key, which is either a vote Id or an answer to the question. This primary key is optimized to store our items. To query the total number of votes for answers to questions, we query the global secondary index of our table. Its primary key is composed of the “answer” attribute as its partition key and the “count” attribute as its sort key. When we scan the global secondary index, it returns only items with a “count” attribute. Thus, we only get our aggregated values: the total number of votes per answer for each question.
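The following in-memory sketch illustrates this item layout and why the index only exposes the aggregates. It does not call DynamoDB: `Item`, `VoteTableSketch`, and the member names are hypothetical, and the private method stands in for the stream-triggered Lambda function. In DynamoDB itself, the increment would more likely be an atomic counter update than the read-then-write shown here.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// One table item. Individual votes carry no Count; aggregate items do.
public sealed record Item(string Question, string SortKey, string Answer, long? Count);

// In-memory stand-in for the votes table (names are assumptions).
public sealed class VoteTableSketch
{
    // Primary key: partition key = question, sort key = vote id or answer.
    private readonly Dictionary<(string, string), Item> items = new();

    // Store one individual vote under (question, voteId).
    public void PutVote(string question, string voteId, string answer)
    {
        items[(question, voteId)] = new Item(question, voteId, answer, null);
        OnVoteInserted(question, answer); // stands in for the stream trigger
    }

    // Create or increment the aggregate item stored under (question, answer).
    private void OnVoteInserted(string question, string answer)
    {
        var key = (question, answer);
        long current = items.TryGetValue(key, out var existing) ? (existing.Count ?? 0) : 0;
        items[key] = new Item(question, answer, answer, current + 1);
    }

    // Mimics scanning the index keyed on (answer, count): items without
    // a Count are absent from it, so only the aggregates come back.
    public IEnumerable<Item> ScanAggregateIndex() =>
        items.Values.Where(i => i.Count.HasValue);
}
```

After three calls to `PutVote` for the same question, two for one answer and one for another, `ScanAggregateIndex` yields two aggregate items and none of the three individual votes.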

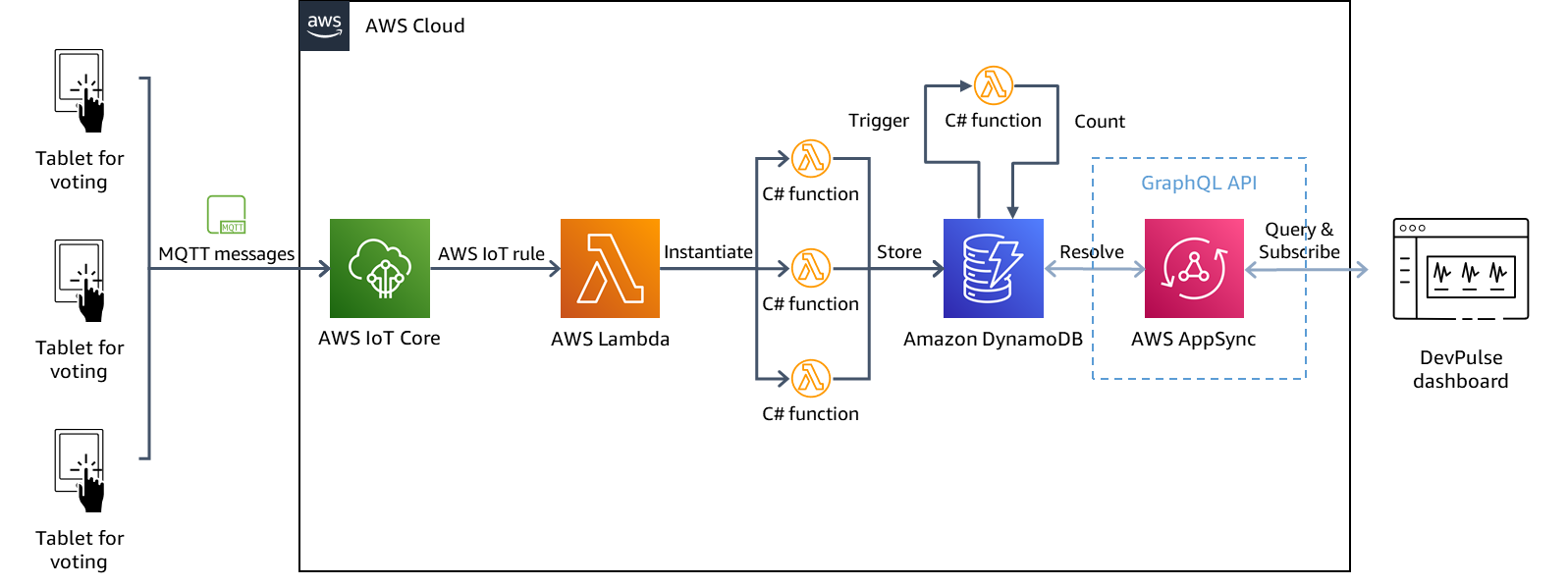

We expose this data through a GraphQL API hosted by AWS AppSync. Our dashboard queries the data when it first loads. Amazon DynamoDB is one of the data sources directly available in AppSync. We use the Apache Velocity Template Language to write our Amazon DynamoDB resolver. A resolver defines how AppSync queries the data from the data source (here, DynamoDB) and maps the results to the GraphQL response schema.

But how does AppSync know that the data has been updated? Again, we use a C# Lambda function triggered by the DynamoDB stream. We filter the stream so that only changes to our aggregated values trigger the Lambda function. The function then calls a GraphQL mutation operation. This operation is an empty operation. It doesn’t really update the data but serves to trigger data update messages that flow through the subscription operation up to the dashboard.

Here we are. This is our general application architecture. Using the MQTT protocol and buffering the votes locally, we can deal with unreliable internet connectivity. Using only serverless services that scale up automatically and then scale down to 0, we are ready for seasonal use. It also minimizes infrastructure management tasks and infrastructure costs. Those services are reliable and secure by design, and we apply security best practices to our own code, such as using least-privilege access between services or device-based authentication through certificates.

.NET Implementation

You may wonder why we implemented this application with C# and .NET. The answer is quite straightforward: because of the skills of the team and because we love C# and .NET. I have been a .NET developer for more than 19 years, and my teammates are also seasoned .NET developers. .NET is a vibrant and modern development platform. It was reborn and embraced the future when its runtime was rewritten as an open-source, cross-platform runtime. Given this, why would we have sacrificed our productivity for another development stack?

Cross-Platform Frontend Application With .NET MAUI

To build our cross-platform frontend application, we decided to use .NET MAUI. It enables us to have one codebase while targeting multiple platforms. It is faster than its predecessor, Xamarin. I will not go deep into .NET MAUI here. You can find many resources on the internet to guide you through it. Our main recommendation is to always use the .NET MAUI Community Toolkit. It adds a lot of value on top of .NET MAUI with a collection of reusable elements, including animations, behaviors, converters, effects, and helpers. To deal with MQTT from our client application, we use the MQTTnet library. It is a battle-tested MQTT library supported by the .NET Foundation with 7.7M+ downloads on NuGet.

Serverless Backend

For our serverless backend, we built our C# Lambda functions using the .NET 6 Lambda runtime. When our C# Lambda function, which stores the vote, is triggered by the AWS IoT rule, the Lambda runtime passes in the MQTT message as a JSON object. In this case, you can simply deserialize this JSON object as a System.Text.Json.Nodes.JsonObject. Deserialization and serialization of input parameters and results are easy to set up thanks to the LambdaSerializer attribute provided by the Amazon.Lambda.Core NuGet package. The Amazon.Lambda.Serialization.SystemTextJson package provides a default serializer class, DefaultLambdaJsonSerializer. Our C# Lambda function code looks like this:

```csharp
using Amazon.DynamoDBv2;
using Amazon.DynamoDBv2.DataModel;
using Amazon.Lambda.Core;
using System.Text.Json.Nodes;

// Assembly attribute to enable the Lambda function's JSON input to be converted into a .NET class.
[assembly: LambdaSerializer(typeof(Amazon.Lambda.Serialization.SystemTextJson.DefaultLambdaJsonSerializer))]

namespace DevPulse.BackendProcessing.VoteStorage;

public class Function
{
    private AmazonDynamoDBClient client;
    private DynamoDBContext dbcontext;

    public Function()
    {
        this.client = new();
        this.dbcontext = new(this.client);
    }

    public async Task<bool> FunctionHandler(JsonObject jsonObject, ILambdaContext context)
    {
        LambdaLogger.Log("BEGIN: Event received ");
        try
        {
            var guid = Guid.NewGuid().ToString();
            Vote vote = new()
            {
                Id = guid,
                Answer = (string)jsonObject["Answer"],
                Question = (string)jsonObject["Question"],
                CorrelationId = (string)jsonObject["GUID"],
                Timestamp = DateTime.Now
            };
            await dbcontext.SaveAsync(vote);
            LambdaLogger.Log("ACCEPTED: Event stored");
            return true;
        }
        catch (Exception e)
        {
            LambdaLogger.Log("EXCEPTION: " + e.Message);
        }
        return false;
    }
}
```

You can find several NuGet packages that offer plain old C# objects to serialize and deserialize the input parameters depending on the type of event triggering your C# Lambda function. For example, our C# Lambda function triggered by the Amazon DynamoDB stream event uses Amazon.Lambda.DynamoDBEvents package. Our code looks like this:

```csharp
using Amazon.DynamoDBv2;
using Amazon.DynamoDBv2.DataModel;
using Amazon.Lambda.Core;
using Amazon.Lambda.DynamoDBEvents;

// Assembly attribute to enable the Lambda function's JSON input to be converted into a .NET class.
[assembly: LambdaSerializer(typeof(Amazon.Lambda.Serialization.SystemTextJson.DefaultLambdaJsonSerializer))]

namespace DevPulse.BackendProcessing.AggregateVote;

public class Function
{
    private AmazonDynamoDBClient client;
    private DynamoDBContext dbcontext;

    public Function()
    {
        this.client = new();
        this.dbcontext = new(this.client);
    }

    public async Task FunctionHandler(DynamoDBEvent evt, ILambdaContext context)
    {
        foreach (var record in evt.Records)
        {
            await ProcessRecord(record);
        }
    }

    private async Task ProcessRecord(DynamoDBEvent.DynamodbStreamRecord record)
    {
        // process the record
    }
}
```

It leverages the DynamoDBEvent and DynamodbStreamRecord POCO objects, and we don’t have to deal with the deserialization and serialization of the Lambda parameters.

Live Dashboard

Our live dashboard is a Blazor WebAssembly application. As we don’t have real JavaScript skills, it enables us to build a browser-side interactive application with Razor markup and C# while still relying on an open web standard: WebAssembly. WebAssembly is a binary instruction format for a stack-based virtual machine. It is designed as a portable compilation target for programming languages, enabling deployment on the web for client and server applications. It is part of the open web platform and is developed in a W3C Community Group and a W3C Working Group. Blazor WebAssembly is a single-page application framework leveraging WebAssembly to run .NET code inside web browsers.

We use AWS AppSync to define and host our GraphQL API. To query this API from our Blazor WebAssembly application, we use Strawberry Shake, a .NET client library for GraphQL APIs. Calling GraphQL query and mutation operations from Strawberry Shake is straightforward. When it came to subscribing to our subscription operation, however, we had to extend the Strawberry Shake default implementation, because AWS AppSync uses a different WebSocket message flow. We built our own ISocketProtocol implementation that follows the AppSync WebSocket message flow.
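To give an idea of how the AppSync flow differs, here is a minimal sketch of the message a client sends to register a subscription, as we understand it from the AppSync real-time WebSocket documentation. It is not our ISocketProtocol implementation. `AppSyncMessages` and `BuildStartMessage` are hypothetical names, and API key authorization is an assumption made only for illustration; other authorization modes use different header fields.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

public static class AppSyncMessages
{
    // Builds the "start" message that registers a subscription.
    // Assumes API key authorization (an assumption, see above).
    public static string BuildStartMessage(
        string subscriptionId, string query, string host, string apiKey)
    {
        // The GraphQL document travels as a JSON *string* in payload.data,
        // not as a nested JSON object.
        string data = JsonSerializer.Serialize(new Dictionary<string, object>
        {
            ["query"] = query,
            ["variables"] = new Dictionary<string, object>()
        });

        var message = new Dictionary<string, object>
        {
            ["id"] = subscriptionId,
            ["type"] = "start",
            ["payload"] = new Dictionary<string, object>
            {
                ["data"] = data,
                ["extensions"] = new Dictionary<string, object>
                {
                    // Authorization details are repeated in every start message.
                    ["authorization"] = new Dictionary<string, string>
                    {
                        ["host"] = host,
                        ["x-api-key"] = apiKey
                    }
                }
            }
        };

        return JsonSerializer.Serialize(message);
    }
}
```

The stringified `data` field and the per-message `authorization` extension are the main reasons a generic GraphQL-over-WebSocket client needs a custom protocol layer to talk to AppSync.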

As our Blazor WebAssembly application is a static single-page application like an Angular, React, or Vue.js application, we use Amazon S3 and Amazon CloudFront to host and deliver it. Amazon S3 is an object storage service. Amazon CloudFront is a content delivery network (CDN) service built for high performance, security, and developer convenience. Used together, they let us host and deliver our application easily while meeting our low-cost and low-operation requirements. We follow very similar steps to those detailed in this prescriptive guidance. You can simplify the steps and replace React with Blazor WebAssembly.

Conclusion

We had a lot of fun building this application. It helped us start a lot of insightful conversations at the Developer Lounge during re:Invent 2022. There are two things I would like to highlight. From a .NET standpoint, there is nothing specific about using AWS. We just use the provided packages for the services we need, as we would with any other service provider. From an AWS standpoint, there is nothing specific about building a .NET application. Our architecture is quite standard. For example, back in 2019, David Moser wrote this post about a similar architecture for monitoring IoT devices in real time with AWS AppSync.

So if you are a .NET developer looking at using cloud services to build your next application, I encourage you to test and evaluate AWS. It will enable you to make an informed decision rather than defaulting to the go-to solution.