It’s re:Invent season already, and we have exciting news to announce with Amazon this year. With all these remote sessions, what’s better than a quick lab to play with the new stuff? It’s starting to feel like Christmas already!

We’re going to kill two birds with one stone (just an idiom, keep reading) and experiment with two of our latest announcements. First on the list is “Install Amazon EKS Distro anywhere” with the EKS snap, a frictionless way to try the full EKS-D experience in a snap. Second is the LTS Docker Image Portfolio of secure container images from Canonical, available on Amazon ECR Public.

This blog will be a good starting point to try these new AWS services with open-source technology.

Why opt for LTS Docker Images?

“Who needs to run one container for ten, or even five, years?” you may ask. And that would be a fair question.

LTS stands for “Long Term Support.” The Ubuntu distro made the acronym famous a few years ago: a new LTS release comes out every two years, and each one gets five years of free security updates. Since then, Canonical has also introduced Extended Security Maintenance (ESM), which adds five more years of support. With the LTS Docker Image Portfolio, Canonical extends this 10-year commitment to some applications on top of Ubuntu container images.

Why opt for LTS Docker Images when agility runs the world? The reality is that enterprises, especially those running intricate software stacks, cannot always keep up with the pace of upstream development. In some locations, such as the edge of the network, or in some critical use cases, production workloads cannot take new versions with potentially breaking changes and are limited to security updates only. Recent publications showed that vulnerabilities in containers are a reality, and keeping up with upstream applications isn’t always possible (this article from DarkReading takes image analysis on medical devices as an example). Canonical’s LTS images ensure your pipelines won’t break every two days, giving you time to develop at your own pace and focus on your core features.

Getting started

Here I will show you how to create an Amazon EKS Distro cluster on your computer or server, on which we will deploy a sample LTS NGINX Docker image. You will need a machine that can run snaps (Ubuntu already ships with snapd). Also, make sure you remove MicroK8s if you have installed it, because it would conflict with the EKS snap.

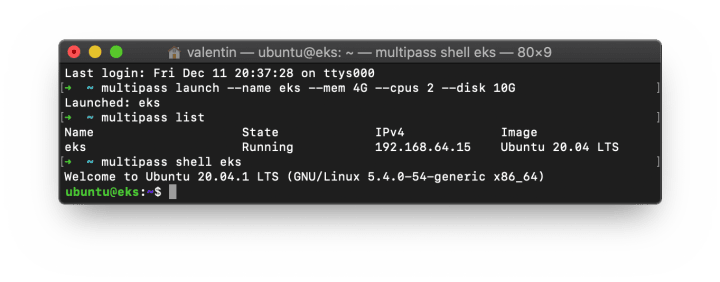

I use Multipass to get a clean Ubuntu VM; I recommend it for this Lab.
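If you want to follow along with Multipass, a minimal sketch could look like the one below. The VM name eks-lab and the CPU, memory and disk sizes are my assumptions, not requirements of the EKS snap; adjust them to your machine.

```shell
# Hypothetical VM name; override it by exporting VM_NAME first.
VM_NAME="${VM_NAME:-eks-lab}"

launch_lab_vm() {
  # Start a fresh Ubuntu VM with 2 CPUs, 4G of RAM and 20G of disk (assumed sizes).
  multipass launch -n "$VM_NAME" -c 2 -m 4G -d 20G &&
  # Print the VM details, including the IPv4 address used later in this lab.
  multipass info "$VM_NAME"
}
```

Call launch_lab_vm, then open a shell inside the VM with multipass shell eks-lab and run the rest of the lab from there.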

Amazon EKS Distro (EKS-D) comes in a snap called “EKS” – its documentation is on snapcraft.io/eks. Let’s snap install it! At the time of writing, the EKS snap is available in the edge (development) channel and without strict confinement (classic).

sudo snap install eks --classic --edge

Once the EKS snap is installed, we will add our user to the “eks” group (to run commands without sudo), give them ownership of the kubectl config folder, reload the session (to make the changes effective) and create an alias (to make our lives easier).

sudo usermod -a -G eks $USER

sudo chown -f -R $USER ~/.kube

sudo su - $USER

sudo snap alias eks.kubectl kubectl
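Before going further, it can help to confirm that the new session really picked up the eks group. The helper below is a small sketch of my own (in_group is not part of the EKS snap); it only relies on the standard id command.

```shell
# in_group GROUP: succeed if the current session belongs to GROUP.
in_group() {
  for g in $(id -nG); do
    [ "$g" = "$1" ] && return 0
  done
  return 1
}

if in_group eks; then
  echo "eks group active: no sudo needed for eks commands"
else
  echo "eks group not active yet: log out and back in, or rerun 'sudo su - \$USER'"
fi
```

If the second message shows up, the usermod change has not reached your current shell yet.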

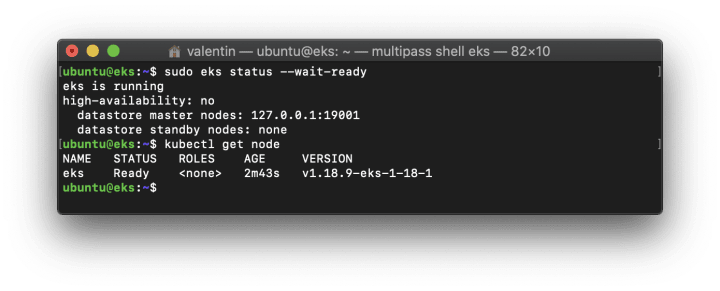

sudo eks status --wait-ready

You can already communicate with your cluster. Run kubectl get node and you will see information about your node running EKS-D.

Hurray, you’ve successfully created a Kubernetes cluster using Amazon EKS Distro.

Deploy an LTS NGINX using EKS-D

We will now deploy an NGINX server from Canonical’s maintained repository on Amazon ECR Public. Let’s use the public.ecr.aws/lts/nginx:1.18-20.04_beta image. The tag tells us what we get: an NGINX 1.18 server on top of the Ubuntu 20.04 LTS image, secure and fully maintained, published at a risk level no higher than beta.

First, use the following command to create an index.html that we will later fetch from a browser.

mkdir -p project

cat <<EOF > ./project/index.html
<html>
<head> <title>HW from EKS</title> </head>
<body>
<p>Hello world, this is NGINX on my EKS cluster!</p>
<img src="https://http.cat/200" />
</body>
</html>
EOF

Then, create your deployment configuration, nginx-deployment.yml, with the following content.

# nginx-deployment.yml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: public.ecr.aws/lts/nginx:1.18-20.04_beta
        volumeMounts:
        - name: nginx-config-volume
          mountPath: /var/www/html/index.html
          subPath: index.html
      volumes:
      - name: nginx-config-volume
        configMap:
          name: nginx
          items:
          - key: nginx-site
            path: index.html

We’re telling EKS to create a deployment made of one pod with one container running NGINX and mapping our local index.html file through a configMap. Let’s first create the said configMap, and apply our deployment:

kubectl create configmap nginx --from-file=nginx-site=./project/index.html

kubectl apply -f nginx-deployment.yml

watch kubectl get pods
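The kubectl create configmap command above is imperative. If you would rather keep everything in version control, a declarative equivalent might look like the sketch below. The file name nginx-configmap.yml is my own choice; the key nginx-site has to match the key listed under items in the deployment.

```shell
# Write a ConfigMap manifest equivalent to the
# `kubectl create configmap nginx --from-file=nginx-site=...` command.
# nginx-configmap.yml is a hypothetical file name.
cat > nginx-configmap.yml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx
data:
  # This key is referenced by `items[].key` in nginx-deployment.yml.
  nginx-site: |
    <html>
      <head> <title>HW from EKS</title> </head>
      <body>
        <p>Hello world, this is NGINX on my EKS cluster!</p>
        <img src="https://http.cat/200" />
      </body>
    </html>
EOF
```

You would then run kubectl apply -f nginx-configmap.yml instead of kubectl create configmap. Keep in mind that a file mounted with subPath is not refreshed when the ConfigMap changes, so restart the pod (for example with kubectl rollout restart deployment/nginx-deployment) after editing the page.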

That’s it, you could let it run for ten years!

Jokes aside, using an LTS image as part of your CI/CD pipeline means freeing your app from upstream changes, without compromising security, all thanks to containers.

Expose and access your website

Let’s edit our deployment to access our website while implementing a few best practices.

Limit pod resources

Successful attacks often result from a combination of cluster misconfiguration and container vulnerabilities. This cocktail is never good. To keep an attacker who compromises only your NGINX pod from starving the rest of the cluster, we’re going to set resource limits.

Edit your nginx-deployment.yml file to add the following resources section:

# nginx-deployment.yml - skipped [...] some parts to save space
[...]
      containers:
      - name: nginx
        [...]
        resources:
          requests:
            memory: "30Mi"
            cpu: "100m"
          limits:
            memory: "100Mi"
            cpu: "500m"
[...]

Run one more kubectl apply -f nginx-deployment.yml to update your configuration.
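To check that the new limits were actually rolled out, something like the following could be used. The function name show_nginx_resources and the 120s default timeout are illustrative assumptions of mine; the kubectl subcommands themselves are standard.

```shell
# show_nginx_resources [TIMEOUT]: wait for the rollout, then print the limits.
show_nginx_resources() {
  # Block until the updated pod is running (or the timeout expires).
  kubectl rollout status deployment/nginx-deployment --timeout="${1:-120s}" &&
  # Print the resources section of the first container in the pod template.
  kubectl get deployment nginx-deployment \
    -o jsonpath='{.spec.template.spec.containers[0].resources}'
}
```

Calling show_nginx_resources should end with the requests and limits you just added; if the rollout never completes, kubectl describe pod is a good next stop.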

Create a service to expose NGINX

Keep reading, we’re so close to the goal! Let’s make this web page reachable from outside the cluster.

Create an nginx-service.yml file with the following content:

# nginx-service.yml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 31080
    name: nginx

One more apply, and voilà!

$ kubectl apply -f nginx-service.yml

service/nginx-service created

$ kubectl get services

NAME            TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
nginx-service   NodePort   10.152.183.242   <none>        80:31080/TCP   4s

Note: 192.168.64.15 is the IP of my Multipass VM, which is also my EKS node (look at the first screenshot!). With the service in place, the website is reachable at that IP on port 31080.
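With a NodePort service, the page is served on the node’s IP at port 31080. A quick way to test it from the command line is sketched below; node_ip, nginx_url and fetch_site are helper names I made up, and the jsonpath query assumes a single-node cluster like the one in this lab.

```shell
# node_ip: print the InternalIP of the first (here, only) node.
node_ip() {
  kubectl get nodes \
    -o jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}'
}

# nginx_url IP [PORT]: build the URL of the NodePort service.
nginx_url() {
  printf 'http://%s:%s/\n' "$1" "${2:-31080}"
}

# fetch_site: request the page through the NodePort and print the HTML.
fetch_site() {
  curl -fsS "$(nginx_url "$(node_ip)")"
}
```

Running fetch_site should print the index.html created earlier, and nginx_url 192.168.64.15 gives the address to paste into a browser.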

What’s next?

We installed Amazon EKS Distro, using the EKS snap. We then deployed an LTS NGINX server with EKS-D. All this on any machine where you can use snap… in other words, any Linux. The Amazon EKS anywhere experience has never been simpler!

Next, you could use Juju to manage your applications on both public clouds and edge devices running MicroK8s or EKS-D. Or you could simply start by adding a few more pods to your cluster, using Canonical’s LTS Docker Image Portfolio from Amazon ECR Public.

- https://ubuntu.com/blog/canonical-publishes-lts-docker-image-portfolio-on-docker-hub

- https://ubuntu.com/blog/canonical-lts-docker-image-portfolio-on-amazon-ecr-public

- https://ubuntu.com/blog/install-amazon-eks-distro-anywhere

- https://snapcraft.io/eks/

- https://ubuntu.com/security/docker-images