Let’s get into it right away.

A few weeks ago, the 90-day account expiry set in the vRealize Automation 8.0 and 8.0.1 GA releases was exceeded for both the Postgres and Orchestrator services, which run today as Kubernetes pods.

This issue is resolved in vRealize Automation 8.1, which is soon to be released as of the writing of this post (generally available in 1H20).

This issue is also resolved in the Cumulative Update for vRealize Automation 8.0.1 (HF1/HF2), so if you installed the HF1 patch before the account expiry, you have nothing to worry about.

But what about existing deployments that have not yet been updated with HF1 or HF2, or net-new deployments of vRealize Automation 8.0/8.0.1, and how might they be impacted by this issue? In this blog I address those scenarios: what needs to be done to keep benefiting from everything the automation solution has to offer today, and how to have a successful deployment if you choose to deploy vRealize Automation 8.0.1 now. Once vRealize Automation 8.1 is released, you really don’t have to worry about any of this.

So let’s get started, eh!

Existing Deployments

For existing vRA 8.0 or 8.0.1 customers with active working instances, you have two options before you can reboot the appliance or restart the vRA services:

Option 1

Apply the workaround mentioned in KB 78235 and stay at vRA 8.0.1.

Scenario 1 : vRealize Automation 8.0/8.0.1 is up and running

- SSH into each of the nodes

- Execute

vracli cluster exec -- bash -c 'echo -e "FROM vco_private:latest\nRUN sed -i s/root:.*/root:x:18135:0:99999:7:::/g /etc/shadow\nRUN sed -i s/vco:.*/vco:x:18135:0:99999:7:::/g /etc/shadow" | docker build - -t vco_private:latest'

- Execute

vracli cluster exec -- bash -c 'echo -e "FROM db-image_private:latest\nRUN sed -i s/root:.*/root:x:18135:0:99999:7:::/g /etc/shadow\nRUN sed -i s/postgres:.*/postgres:x:18135:0:99999:7:::/g /etc/shadow" | docker build - -t db-image_private:latest'

- Execute

/opt/scripts/backup_docker_images.sh to persist the new changes through reboots.
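To see what those two `sed` lines actually change, here is a minimal sketch that applies the same substitution to a single made-up shadow entry. The sample line (with a 90-day maximum password age in the fifth field) is an assumption for illustration only; the real entries inside the images may look different. In the standard `/etc/shadow` layout the third field is the day of the last password change, counted in days since 1970-01-01, and the fifth field is the maximum password age in days, so the replacement effectively pushes the expiry out to 99999 days.

```shell
# Hypothetical shadow entry with a 90-day maximum password age (5th field).
# The actual entry inside the vco_private image may look different.
sample='vco:x:18000:0:90:7:::'

# The same substitution the workaround runs inside the image (quoted here for safety).
patched=$(printf '%s\n' "$sample" | sed 's/vco:.*/vco:x:18135:0:99999:7:::/g')

# Field 3 is the last-change day (days since 1970-01-01), field 5 the maximum age in days.
last_change=$(printf '%s\n' "$patched" | cut -d: -f3)
max_age=$(printf '%s\n' "$patched" | cut -d: -f5)

echo "patched entry: $patched"
echo "last change day: $last_change, maximum age: $max_age days"
```

This runs on any machine with a POSIX shell and does not touch a real `/etc/shadow`; it is only meant to make the field positions visible.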

Scenario 2 : vRealize Automation 8.0/8.0.1 is already down as a result.

- SSH into each of the nodes

- Run

/opt/scripts/deploy.sh --onlyClean on a single vRA node to shut down the services safely.

- Once completed, repeat steps 2 through 4 in Option 1, Scenario 1

- Run

/opt/scripts/deploy.sh to start the services up.

Option 2

Apply vRealize Automation 8.0.1 HF1 or HF2 with vRealize Suite Lifecycle Manager 8.0.1 Patch 1.

Scenario 1 : vRealize Automation 8.0/8.0.1 is up and running

It is recommended to install vRealize Suite Lifecycle Manager 8.0.1 patch 1 before vRealize Automation 8.0.1 patch 1. The vRealize Suite Lifecycle Manager 8.0.1 Patch 1 contains a fix for some intermittent delays in submitting the patch request.

Apply vRealize Automation 8.0.1 patch 1 leveraging vRealize Suite Lifecycle Manager 8.0.1 Patch 1.

Scenario 2 : vRealize Automation 8.0/8.0.1 is already down as a result.

- SSH into each of the nodes

- Run

/opt/scripts/deploy.sh --onlyClean on a single vRA node to shut down the services safely.

- Once completed, repeat steps 2 through 4 in Option 1, Scenario 1

- Run

/opt/scripts/deploy.sh to start the services back up.

- Apply vRealize Automation 8.0.1 patch 1 leveraging vRealize Suite Lifecycle Manager 8.0.1 Patch 1

Note: We highly recommend always staying on the most recent builds and patches.

New Deployments

If you need a video tutorial on how to install vRealize Automation 8.x, check either my YouTube video on how to deploy vRA 8.x with vRealize Easy Installer here or my previous blog post here, which also includes the video.

If you do check out my YouTube channel, please subscribe and smash that tiny notification bell to get notified of any new and upcoming videos.

Now that that is out of the way: for new deployments of 8.0.1, and until 8.1 is released with the issue resolved, it is really very simple.

Once you see that vRA 8.0.1 is deployed via vRealize Suite Lifecycle Manager 8.0.1 and that it’s now reachable via the network, do the following:

- SSH into the vRA node

- Execute

kubectl get pods -n prelude to see if vRA started to deploy a few of the services in the prelude namespace.

- Once confirmed, proceed to step 4

- Execute

vracli cluster exec -- bash -c 'echo -e "FROM vco_private:latest\nRUN sed -i s/root:.*/root:x:18135:0:99999:7:::/g /etc/shadow\nRUN sed -i s/vco:.*/vco:x:18135:0:99999:7:::/g /etc/shadow" | docker build - -t vco_private:latest'

- Execute

vracli cluster exec -- bash -c 'echo -e "FROM db-image_private:latest\nRUN sed -i s/root:.*/root:x:18135:0:99999:7:::/g /etc/shadow\nRUN sed -i s/postgres:.*/postgres:x:18135:0:99999:7:::/g /etc/shadow" | docker build - -t db-image_private:latest'

- Execute

/opt/scripts/backup_docker_images.sh to persist the new changes through reboots.

- Keep checking the status of the pods by repeatedly executing

kubectl get pods -n prelude until all the pods are up and running.

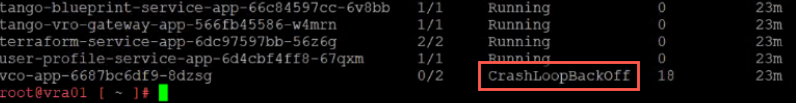
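If you would rather not re-run the command by hand, the check can be sketched as a small shell helper. This is only an illustration under a few assumptions: the function names `pods_not_ready` and `wait_for_prelude` are made up for this post, the 30-second interval is arbitrary, and "up and running" is interpreted as a STATUS of Running or Completed in the third column of the `kubectl get pods --no-headers` output.

```shell
# List pods from `kubectl get pods --no-headers` output (read on stdin)
# whose STATUS column (3rd field) is neither Running nor Completed.
pods_not_ready() {
  awk 'NF >= 3 && $3 != "Running" && $3 != "Completed" { print $1 " " $3 }'
}

# Hypothetical polling loop for a vRA node: checks every 30 seconds and
# returns once at least one pod exists and none are pending.
wait_for_prelude() {
  while true; do
    out=$(kubectl get pods -n prelude --no-headers) || return 1
    pending=$(printf '%s\n' "$out" | pods_not_ready)
    if [ -n "$out" ] && [ -z "$pending" ]; then
      echo "All pods in the prelude namespace are Running or Completed."
      return 0
    fi
    echo "Still waiting on:"
    echo "$pending"
    sleep 30
  done
}
```

Calling `wait_for_prelude` on the node starts the loop; `pods_not_ready` can also be used on its own by piping the `kubectl` output into it.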

If you’re only installing one appliance and you notice that the vco-app pod status is CrashLoopBackOff, you will need to delete the pod so that a new one gets provisioned from the updated Docker image we built in step 4. To do so, execute the following command, replacing vco-app-pod-name with the actual pod name:

kubectl delete pods -n prelude vco-app-pod-name
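The actual pod name carries a generated suffix, so it has to be looked up first. One possible way, sketched below, is to filter the pod listing for names starting with `vco-app` whose STATUS column reads CrashLoopBackOff. The helper name `crashlooping_pods` is hypothetical and not part of vRA or kubectl.

```shell
# Print names of pods whose name starts with the given prefix and whose
# STATUS column (3rd field) is CrashLoopBackOff.
# Expects `kubectl get pods --no-headers` output on stdin.
crashlooping_pods() {
  awk -v prefix="$1" 'index($1, prefix) == 1 && $3 == "CrashLoopBackOff" { print $1 }'
}

# On a vRA node this could be used as follows (not run here):
#   kubectl get pods -n prelude --no-headers | crashlooping_pods vco-app
```

The name it prints is what goes in place of vco-app-pod-name in the delete command above.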

If you’re installing a cluster, you can’t simply delete the postgres pod to fix it: the postgres instances on the remaining nodes need to be able to replicate data, otherwise the other services that depend on postgres will also fail. So it’s better to just shut down all the services on each of the nodes by doing the following:

- SSH into the vRA node

- Execute

kubectl get pods -n prelude to see if vRA started to deploy a few of the services in the prelude namespace.

- Execute

/opt/scripts/deploy.sh --onlyClean on each of the nodes to stop the services.

- Once completed, execute the workaround by repeating steps 4 through 6

- Run

/opt/scripts/deploy.sh on each of the nodes to start the services up.

Once your appliance or cluster is up and running, apply vRealize Automation 8.0.1 HF1 or HF2 (also soon to be released), as I mentioned above in Option 2 for Existing Deployments.

If you already have one appliance with HF1, you can’t scale out to create a cluster, since the original image is not patched with HF1. So unfortunately you have to wait a couple more weeks until 8.1 is out; then you can upgrade and scale out your deployment into a production-ready cluster.

If you do have any questions, please post them below. I will try my best to have them answered.

Hope this has been helpful if you have made it to the end.

The End Eh!