Every few years, the Linux world finds something to fight about. Sometimes it is about package managers, sometimes about text editors, but nothing in recent memory split the community quite like systemd. What began as an init replacement quietly grew into a full-blown identity crisis for Linux itself, complete with technical manifestos, emotional arguments, and more mailing list drama than I ever thought possible.

I did not plan to take a side in that debate. Like most users, I just wanted my server to boot, my logs to make sense, and my scripts to run. Yet systemd had a way of showing up uninvited. It replaced the old startup scripts I had trusted for years and left me wondering whether the Linux I loved was changing into something else entirely.

Over time, I learned that this was not simply a story about software. It was a story about culture, trust, and how communities handle change. What follows is how I got pulled into that argument and what I learned when I finally stopped resisting. I have even created a course on advanced automation with systemd. That is how much I use systemd now.

How I Got Pulled into the Systemd Debate

My introduction to systemd was not deliberate. It arrived uninvited with an update, quietly replacing the familiar tangle of /etc/init.d scripts on Manjaro. The transition was abrupt enough to feel like a betrayal. One morning, my usual service apache2 restart returned a polite message: Use systemctl instead. That was the first sign that something fundamental had changed.

I remember the tone of Linux forums around that time was half technical and half existential. Lennart Poettering, systemd’s creator, had become a lightning rod for criticism. To some, he was the architect of a modern, unified boot system; to others, he was dismantling the very ethos that made Unix elegant. I was firmly in the second camp!

Back then, my world revolved around small workstations and scripts I could trace line by line. The startup process was tangible: you just had to open /etc/rc.local, add a command, and know it would run. When Fedora first adopted systemd in 2011, followed later by Debian, I watched from a distance with the comfort of someone on a “safe” distribution, but it was only a matter of time.

Ian Jackson of Debian called the decision to make systemd the default a failure of pluralism, and Devuan was born soon after as a fork of Debian, built specifically to keep sysvinit alive. On the other side, Poettering argued that systemd was never meant to violate Unix principles, but to reinterpret them for modern systems where concurrency and dependency tracking actually mattered.

I followed those arguments closely, sometimes nodding along with Jackson’s insistence on modularity, other times feeling curious about Poettering’s idea of “a system that manages systems.” Linus Torvalds chimed in occasionally, not against systemd itself but against its developers’ attitudes, which kept the controversy alive far longer than it might have lasted.

At that point, I saw systemd as something that belonged to other distributions, an experiment that might stabilize one day but would never fit into the quiet predictability of my setups. That illusion lasted until I switched to a new version of Manjaro in 2013, and the first boot greeted me with the unmistakable parallel startup messages of systemd.

The Old World: init, Scripts, and Predictability

Before systemd, Linux startup was beautifully transparent. When the machine booted, you could almost watch it think. The bootloader passed control to the kernel, the kernel mounted the root filesystem, and init took over from there. It read a single configuration file, /etc/inittab, and decided which runlevel to enter.

Each runlevel had its own directory under /etc/rc.d/, filled with symbolic links to shell scripts. The naming convention (S01network, S20syslog, K80apache2) was primitive but logical. The S stood for “start,” K for “kill,” and the numbers determined order. The whole process was linear, predictable, and very, very readable.
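The ordering rule above can be sketched in a few lines of shell. This is a dry run under stated assumptions, not the rc script any particular distribution shipped: run_rc_dir is a hypothetical helper, it only prints what it would do, and it assumes K links are processed before S links.

```shell
#!/bin/sh
# Dry-run sketch of an rc runner for one runlevel directory.
# run_rc_dir is a hypothetical helper, not a real SysV tool.
run_rc_dir() {
    dir=$1

    # K* links are handled first so services are stopped before
    # anything new starts. Globs expand in sorted order, so the
    # two-digit prefix decides the sequence.
    for link in "$dir"/K*; do
        [ -e "$link" ] || continue   # glob matched nothing
        echo "stop  ${link##*/}"     # a real runner would call: "$link" stop
    done

    for link in "$dir"/S*; do
        [ -e "$link" ] || continue
        echo "start ${link##*/}"     # a real runner would call: "$link" start
    done
}
```

Pointing it at a directory that holds S20syslog, S01network, and K80apache2 lists apache2 as stopped first, then network and syslog as started, in that order. The sequence comes purely from the file names, which is exactly why it was easy to read and hard to make smarter.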

If something failed, you could open the script and see exactly what went wrong, and debugging was often as simple as adding an echo statement or two. On a busy day, I would edit /etc/init.d/apache2, add logging to a temporary file, and restart the service manually.

A typical init script looked something like this:

    #!/usr/bin/env sh
    ### BEGIN INIT INFO
    # Provides: myapp
    # Required-Start: $network
    # Required-Stop:
    # Default-Start: 2 3 4 5
    # Default-Stop: 0 1 6
    # Short-Description: Start myapp daemon
    ### END INIT INFO

    case "$1" in
      start)
        echo "Starting myapp"
        /usr/local/bin/myapp &
        ;;
      stop)
        echo "Stopping myapp"
        killall myapp
        ;;
      restart)
        $0 stop
        $0 start
        ;;
      *)
        echo "Usage: /etc/init.d/myapp {start|stop|restart}"
        exit 1
    esac

    exit 0

Crude, yes, but understandable. Even if you did not write it, you could trace what happened as it was pure shell, running in sequence, and every decision it made was visible to you.

This simplicity was based on the Unix principle of small, composable parts. You could swap out one script without affecting the rest and even bypass init entirely by editing /etc/rc.local, placing commands there for one-off startup tasks.

The problem, however, was that because everything ran in a fixed order, startup could be painfully slow. If a single service stalled, the rest of the boot process might hang. Dependencies were also implied rather than enforced. I could state that a service “required networking,” but the system had no reliable way to verify that the network was fully up.
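In practice, that gap was often papered over with a polling loop at the top of a script. The sketch below is an assumption about what such a workaround could look like, not code from any specific distribution; wait_for is a hypothetical helper.

```shell
#!/bin/sh
# wait_for TRIES CMD...: run CMD about once per second until it succeeds
# or TRIES attempts have been used. Hypothetical helper for illustration.
wait_for() {
    tries=$1
    shift
    while [ "$tries" -gt 0 ]; do
        if "$@" >/dev/null 2>&1; then
            return 0              # the readiness check passed
        fi
        tries=$((tries - 1))
        sleep 1
    done
    return 1                      # gave up; the caller decides what to do
}
```

An init script might have called something like wait_for 30 ping -c 1 gateway.example before starting its daemon, where gateway.example is a placeholder host. It works, but every script had to carry its own copy of the guess about what “network is up” means.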

Parallel startup was also nearly, if not completely, impossible. Distributions like Ubuntu experimented with alternatives such as Upstart, which tried to make init event-driven rather than sequential, but it never fully replaced the traditional init scripts.

When systemd appeared, it looked like another attempt in the same category, a modernization effort destined to break the things I liked most. From my perspective, init was not broken. It was slow, yes, but it gave me total control, and I did not like seeing that replaced by a binary I could not open in a text editor.

The early systemd adopters claimed that it was faster, cleaner, and more consistent, but the skeptics (myself included) saw it as a power grab by Red Hat. The subsequent years have proven this fear somewhat justified, as maintaining non-systemd configurations has become increasingly difficult, but the standardization has also made Linux more predictable across distributions.

Looking back, I now realize that I had mistaken predictability for transparency. The old init world felt clear because it was simple, not because it was necessarily better designed. As systems grew more complex with services that had to interact asynchronously, containers starting in milliseconds, and dependency chains that spanned multiple layers, the cracks began to show.

What Systemd Tried to Fix

It took me a while to understand that systemd was not an act of defiance against the Unix philosophy but a response to a real set of technical problems. Once I started reading Lennart Poettering’s early blog posts, a clearer picture emerged. His goal was not to replace init for its own sake but to make Linux startup faster, more reliable, and more predictable.

Poettering and Kay Sievers began designing systemd in 2010 at Red Hat, with Fedora (now my daily driver) as its first proving ground. Their idea was to build a parallelized, dependency-aware init system that could handle modern workloads gracefully. Instead of waiting for one service to finish before starting the next, systemd would launch multiple services concurrently based on declared dependencies.

At its heart, systemd introduced unit files, small declarative configurations that replaced the hand-written shell scripts of SysV init. Each unit described a service, socket, target, or timer in a consistent, machine-readable format. The structure was both simple and powerful:

    [Unit]
    Description=My Custom Service
    After=network.target

    [Service]
    ExecStart=/usr/local/bin/myapp --config /etc/myapp.conf
    Restart=on-failure
    User=myuser

    [Install]
    WantedBy=multi-user.target

To start it, you no longer edited symlinks or runlevels. You simply ran:

    sudo systemctl start myapp.service
    sudo systemctl enable myapp.service

The old init scripts had no formal way to express “start only after this other service has finished successfully.” They relied on arbitrary numbering and human intuition. With systemd, relationships were declared using After=, Before=, Requires=, and Wants=.

    [Unit]
    Description=Web Application
    After=network.target database.service
    Requires=database.service

This meant systemd could construct a dependency graph at boot and launch services in optimal order, improving startup times dramatically.
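To get a feel for what “constructing a dependency graph” means, the ordering part can be imitated with the standard tsort utility. This is only an illustration of the idea and says nothing about how systemd implements it internally; webapp.service is a hypothetical name for the unit above, and each input line reads “left must start before right.”

```shell
#!/bin/sh
# Feed "A B" pairs (A starts before B) to tsort, which prints one valid
# start order. The pairs mirror After=network.target database.service.
order_units() {
    tsort <<'EOF'
network.target database.service
database.service webapp.service
network.target webapp.service
EOF
}

order_units
```

With these three pairs the only valid order is network.target, database.service, webapp.service. Add a unit with no ordering constraints and tsort is free to place it anywhere, which is the same freedom that lets an init system start unrelated services in parallel.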

Systemd also integrated timers (replacing cron for system tasks), socket activation (starting services only when needed), and cgroup management (to isolate processes cleanly). The critics called it “scope creep,” but Poettering’s argument was that the components it replaced were fragmented and poorly integrated, and building them into a single framework reduced complexity overall. That claim divided the Linux world!
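For a sense of what the timer piece looks like in practice, here is a minimal sketch that stages a service and timer pair into a directory for review. Everything named here is an assumption for illustration: stage_backup_timer, the backup unit names, and /usr/local/bin/backup.sh are hypothetical.

```shell
#!/bin/sh
# stage_backup_timer DIR: write a oneshot service and a daily timer into
# DIR so they can be inspected before being installed. Hypothetical names.
stage_backup_timer() {
    dir=$1
    mkdir -p "$dir" || return 1

    cat > "$dir/backup.service" <<'EOF'
[Unit]
Description=Nightly backup (hypothetical example)

[Service]
Type=oneshot
ExecStart=/usr/local/bin/backup.sh
EOF

    # A timer activates the service with the same base name by default.
    cat > "$dir/backup.timer" <<'EOF'
[Unit]
Description=Run backup.service once a day

[Timer]
OnCalendar=daily
Persistent=true

[Install]
WantedBy=timers.target
EOF
}
```

After copying both files to /etc/systemd/system, the usual sequence would be systemctl daemon-reload followed by systemctl enable --now backup.timer. Persistent=true asks systemd to catch up on a run that was missed while the machine was off, which a plain cron entry does not do on its own.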

On one side were distributions like Fedora, Arch, and openSUSE, which adopted systemd quickly. They saw its promise in boot speed, unified tooling, and clear dependency tracking. On the other side stood Debian and its derivatives, which valued independence and simplicity (some of you new folks might find it odd given their current Rust adoption). Debian’s Technical Committee vote in 2014 was one of the most contentious in its history. When systemd was chosen as the default, Ian Jackson resigned from the committee, citing an erosion of choice and the difficulty of maintaining alternative inits.

That decision directly led to the birth of Devuan, whose developers described systemd as “an intrusive monolith,” a phrase that captured the mood of the opposition.

Yet, beneath the politics, systemd was solving problems that were not just theoretical. Race conditions during boot were common and service dependencies often failed silently. On embedded devices and containerized systems, startup order mattered in ways SysV init could not reliably enforce.

The Real Arguments (and What They Miss)

The longer I followed the systemd controversy, the more I realized that the arguments around it were not only about code but also about identity. Every debate thread eventually drifted toward the same philosophical divide: should Linux remain a collection of loosely coupled programs, or should it evolve into a cohesive, centrally managed system?

When I read early objections from Slackware and Debian maintainers, they were rarely technical complaints about bugs or performance. They were about trust and the Unix philosophy of “do one thing well” that had guided decades of design. init was primitive but modular, and its limitations could be fixed piecemeal. systemd, by contrast, felt like a comprehensive replacement that tied everything together under a single logic (the current debate around C being memory unsafe and Rust adoption is quite similar in form).

Devuan’s founders said that once core packages like GNOME began depending on systemd-logind, users effectively lost the ability to choose another init system. That interdependence was viewed as a form of lock-in at the architecture level.

Meanwhile, Lennart Poettering maintained that systemd was modular internally, just not in the fragmented Unix sense. He described systemd as an effort to build coherence into an environment that had historically resisted it.

I remember reading Linus’ comments on the matter around 2014. He was not against systemd per se; his frustration (and it has not changed much) was with developer behavior. He called out what he saw as unnecessary hostility from both sides: maintainers blocking patches, developers refusing to accommodate non-systemd setups, and the cultural rigidity that had turned a design debate into a purity contest. His opinion was pragmatic: as long as systemd worked well and did not break things needlessly, it was fine.

The irony was that both camps were right in their own way. The anti-systemd camp correctly foresaw that once GNOME and major distributions adopted it, alternatives would fade, and the pro-systemd side correctly argued that modern systems needed unified control and reliable dependency management.

As someone who would later get into sysadmin and DevOps work, I now feel that the conversation missed the fact that Linux itself had changed. By the early 2010s, most servers were running dozens of services, containers were replacing bare-metal deployments, and hardware initialization had become vastly more complex. Booting was no longer the slow, linear dance it used to be; it was more of a network of parallelized events that had to interact safely and recover from failure automatically.

I once tried to build a stripped-down Debian container without systemd, thinking I could recreate the old init world. It was an enlightening failure. I spent hours wiring together shell scripts and custom supervision loops, all to mimic what a single Restart=on-failure directive did automatically in systemd.

That experience showed me what most arguments missed: the problem was not that systemd did too much, but that the old approach expected the user to do everything manually.

For instance, consider a classic SysV approach to restarting a service on crash. You would write something like this:

    #!/bin/sh
    # Keep myapp running: restart it after a crash, stop after a clean exit.
    while true; do
        /usr/local/bin/myapp
        status=$?
        if [ "$status" -ne 0 ]; then
            echo "myapp crashed with status $status, restarting..." >&2
            sleep 2
        else
            break
        fi
    done

It worked, but it was a hack. systemd gave you the same reliability with two lines of configuration:

    Restart=on-failure
    RestartSec=2

The simplicity of that design was hard to deny. Yet to many administrators, it still felt like losing both control and familiarity. The cultural resistance was amplified by how fast systemd grew. Each release seemed to absorb another subsystem: udevd, logind, networkd, and later resolved. Critics accused it of “taking over userland,” but the more I examined those claims, the more I saw a different pattern.

Each of those tools replaced a historically fragile or inconsistent component that distributions had struggled to maintain separately. Critics also pointed to the technical risk of consolidating so much functionality in one project, fearing a single regression could break the entire ecosystem. The defensive tone of Poettering’s posts did not help, and over time, his name became synonymous with the debate itself.

But even among the loudest critics, there was a reluctant recognition that systemd had improved startup speed, service supervision, and logging consistency. What they feared was not its functionality but its dominance.

The most productive discussions I saw were not about whether systemd was “good” or “bad,” but about whether Linux had space for diversity anymore. In a sense, systemd’s arrival forced the community to confront its own maturity. You could no longer treat Linux as a loose federation of components; it had become a unified operating system in practice, even if the philosophy still insisted otherwise.

By the time I reached that conclusion, the debate had already cooled. Most distributions had adopted systemd by default, Devuan had carved out its niche, and the rest of us were learning to live with the new landscape. I began to see that the real question was not whether systemd broke the Unix philosophy, but whether the old Unix philosophy could still sustain the complexity of modern systems.

What I Learned After Actually Using It

At some point, resistance gave way to necessity. As often happens, I started managing servers (CentOS) that already used systemd, so learning it was no longer optional. What surprised me most was how quickly frustration turned into familiarity once I stopped fighting it. The commands that had felt alien at first began to make sense.

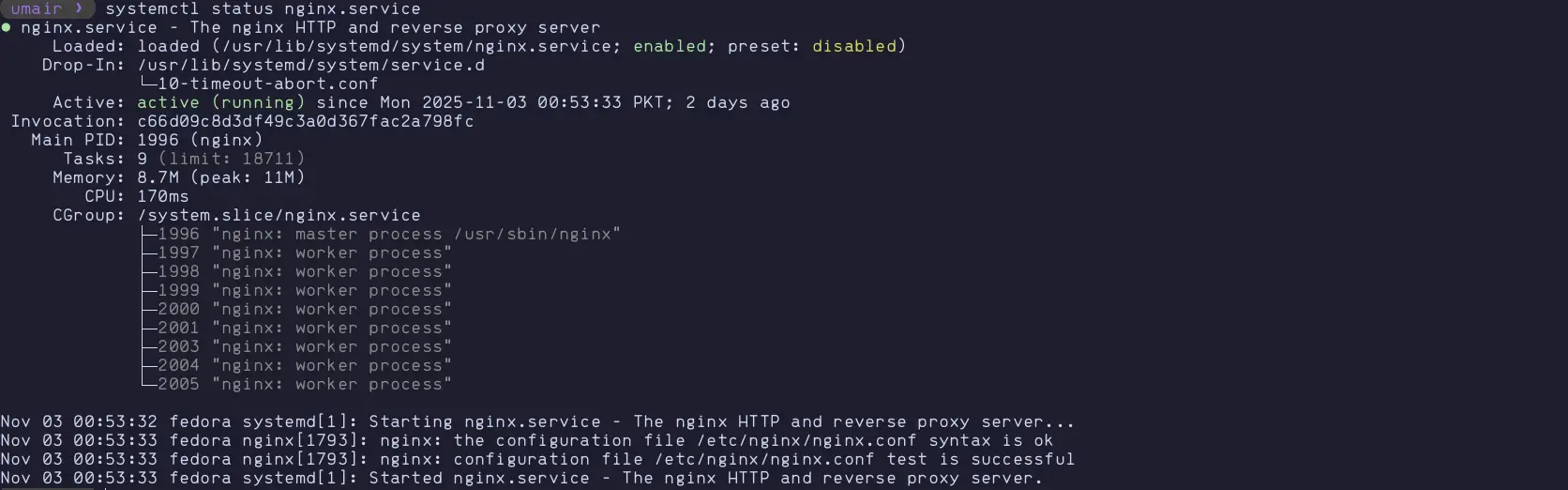

The first time I ran systemctl status nginx.service, I understood what Poettering had been talking about. Instead of a terse message like “nginx is running,” I saw a complete summary including the process ID, uptime, memory usage, recent logs, and the exact command used to start it. It was the kind of insight that had previously required grepping through /var/log/syslog and several ps invocations.

Here is what a typical status output looked like:

It was immediately practical as I could see that the service was running, its exact configuration file path, and its dependencies all in one place.

When a service failed, systemd logged everything automatically. Instead of checking multiple files, I could simply run:

    journalctl -u nginx.service -b

That -b flag restricted the logs to the current boot, saving me from wading through old entries. It was efficient in a way the traditional logging setup never was.
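The same command combines well with a priority filter, and wrapping it in a small function saves typing. This is a sketch rather than a recommended tool: svc_logs is a hypothetical helper, and the JOURNALCTL override exists only so the command line can be previewed without reading the journal.

```shell
#!/bin/sh
# svc_logs UNIT [PRIORITY]: current-boot logs for one unit, limited to
# PRIORITY and anything more severe (err, warning, info, ...).
# Set JOURNALCTL=echo to print the command line instead of running it.
svc_logs() {
    unit=$1
    prio=${2:-info}
    "${JOURNALCTL:-journalctl}" --no-pager -b -u "$unit" -p "$prio"
}
```

Calling svc_logs nginx.service err shows only error-level and more severe entries from the current boot, which is usually the first thing I want after a failed restart.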

Then there was dependency introspection. I could visualize the startup tree with:

    systemctl list-dependencies nginx.service

This command revealed the tree of units that Nginx required or wanted, and the --before and --after options showed the ordering relationships as well. For anyone who had ever debugged slow boots by adding echo statements to init scripts, this was revolutionary.

Over time, I began writing my own unit files. They were simple once you got used to the syntax. I remember converting a small Python daemon I had once managed with a hand-rolled init script. The old version had been about thirty lines of conditional shell logic. The new one was six lines:

    [Unit]
    Description=Custom Python Daemon
    After=network.target

    [Service]
    ExecStart=/usr/bin/python3 /opt/daemon.py
    Restart=always
    RestartSec=5

    [Install]
    WantedBy=multi-user.target

That was all it took to handle startup order, failure recovery, and clean shutdown without any custom scripting. The first time I watched systemd automatically restart the process after a crash, I felt a mix of admiration and reluctant respect.

Some of my early complaints persisted. The binary log format of journald, for one, still felt unnecessary. I understood why it existed, since structured logs allowed richer metadata, but it broke the old habit of inspecting logs with less and grep. I eventually learned that you could still pipe output:

    journalctl -u myapp.service | grep ERROR

So even that compromise turned out to be tolerable. I also began to appreciate how much time I saved not having to worry about service supervision. Previously, I had used supervisord or custom shell loops to keep processes alive, but with systemd, it was built in. When a process crashed, I could rely on Restart=on-failure or Restart=always. If I needed to ensure that something ran only after a network interface was up, I could just declare:

    After=network-online.target
    Wants=network-online.target

One thing that most discussions about systemd missed was built-in service sandboxing. For all the arguments about boot speed and complexity, few people talked about how deeply systemd reshaped security at the service level. The [Service] section of a unit file is not just about start commands; it can define isolation boundaries in a way that old init scripts never could.

Directives like PrivateTmp, ProtectSystem, RestrictAddressFamilies, and NoNewPrivileges can drastically reduce the attack surface of a service. A web server, for instance, can be locked into its own temporary directory with PrivateTmp=true and denied write access to /usr, /boot, and /etc with ProtectSystem=full. Even if compromised, it cannot modify those critical paths, and RestrictAddressFamilies limits which kinds of network sockets it can open.
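As a rough illustration, those directives can live in a drop-in file next to the packaged unit instead of in an edited copy of it. The sketch below stages such a drop-in into a directory for review. The helper name, the hardening.conf file name, and the chosen address families are assumptions, and a real service may need some of these settings relaxed.

```shell
#!/bin/sh
# stage_hardening_dropin DIR: write DIR/nginx.service.d/hardening.conf.
# Hypothetical helper; review the result before installing it.
stage_hardening_dropin() {
    dir=$1/nginx.service.d
    mkdir -p "$dir" || return 1

    cat > "$dir/hardening.conf" <<'EOF'
[Service]
# Private /tmp and /var/tmp, invisible to other services
PrivateTmp=true
# Mount /usr, /boot and /etc read-only for this service
ProtectSystem=full
# Block privilege escalation through setuid binaries
NoNewPrivileges=true
# Allow only IPv4, IPv6 and Unix domain sockets
RestrictAddressFamilies=AF_INET AF_INET6 AF_UNIX
EOF
}
```

Staged under /etc/systemd/system and followed by systemctl daemon-reload and a service restart, the drop-in is merged into the existing unit. Running systemd-analyze security nginx.service before and after gives a rough score for how much exposure remains.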

Still, I had to get past a subtle psychological barrier. For years, I had believed that understanding the system meant being able to edit its scripts directly, and the social pressure around the debate only added to that. With systemd, much of that transparency moved behind declarative configuration and binary logs. It felt at first like a loss of intimacy, but as I learned more about how systemd used cgroups to track processes, I realized it was not hiding complexity but just managing it.

A perfect example came when I started using systemd-nspawn to spin up lightweight containers. The simplicity of systemd-nspawn -D /srv/container was eye-opening as it showed how systemd was not just an init system but a general process manager, capable of running containers, user sessions, and even virtual environments with consistent supervision.

At that point, I began reading the source code and documentation rather than Reddit threads. I discovered how deeply it integrated with Linux kernel features like control groups and namespaces and what had seemed like unnecessary overreach began to look more like a natural evolution of process control.

The resentment faded, and in its place came something more complicated: an understanding that my dislike of systemd had been rooted in nostalgia as much as principle. In a modern environment with hundreds of interdependent services, the manual approach simply did not scale, though I respect people who take a shot at things like building their own AWS alternative.

Systemd was not perfect. It was opinionated and sometimes too aggressive in unifying tools. Yet once I accepted it as a framework rather than an ideology, it stopped feeling oppressive and just became another tool, powerful when used wisely, irritating when misunderstood. By then, I had moved from avoidance to proficiency and could write units, debug services, and configure dependencies with ease. I no longer missed the old init scripts, because the time I spent on maintenance had started to matter to me.

Why Systemd Controversy Still Matters

By now, most major distributions have adopted systemd, and the initial outrage has faded into the background. Yet the debate never truly disappeared; it just changed form. It became less about startup speed or PID 1 design, and more about philosophy. What kind of control should users have over their systems? How much abstraction is too much?

The systemd debate persists because it touches something deeper than process management: the identity of Linux itself. The traditional Unix model prized minimalism and composability, one tool for one job. systemd, by contrast, represents a coordinated platform that integrates logging, device management, service supervision, and even containerization. To people like me, that integration feels awesome; to others, it feels like betrayal.

For administrators who grew up writing init scripts and manipulating processes by hand, systemd signaled a loss of transparency. It replaced visible shell logic with declarative files and binary logs, and assumed responsibility for things that used to belong to the user. For newer users, especially those managing cloud-scale systems, it offered a coherent framework that actually worked the same everywhere. I’m not a huge fan of the word “trade-off,” but unfortunately it defines most of modern computing. The more complexity we hide, the less friction we face in day-to-day tasks, but the more we depend on the hidden layer behaving correctly. It is the same tension that runs through all abstraction, from container orchestration to AI frameworks.

Even now, forks and alternatives such as runit, s6, and OpenRC appear from time to time, each promising a return to simplicity, but few large distributions switch back, because the practical benefits of systemd outweigh nostalgia.

Still, I think the discomfort matters as it reminds us that simplicity is not just a technical virtue but a cultural one. The fear of losing control keeps the ecosystem diverse. Projects like Devuan exist not because they expect to overtake Debian, but because they preserve the possibility of a different path.

The real lesson, for me, is not about whether systemd is good or bad. It is about what happens when evolution in open source collides with emotion. Change in infrastructure is not just a matter of better code; it is also a negotiation between habits, values, and trust.

When I type systemctl now, I no longer feel resistance. I just see a tool that grew faster than we were ready for, one that forced a conversation the Linux world was reluctant to have. The controversy still matters because it captures the moment when Linux stopped being a loose federation of ideas and started becoming an operating system in the full sense of the word. That transition was messy, and it probably had to be.

If you have come this far, you likely see that systemd is more than just an init system; it’s a complete automation framework. If you want to explore that side of it, my course Advanced Automation with systemd walks through how to replace fragile cron jobs with powerful, dependency-aware timers, sandboxed tasks, and resource-controlled services. It’s hands-on and practical!