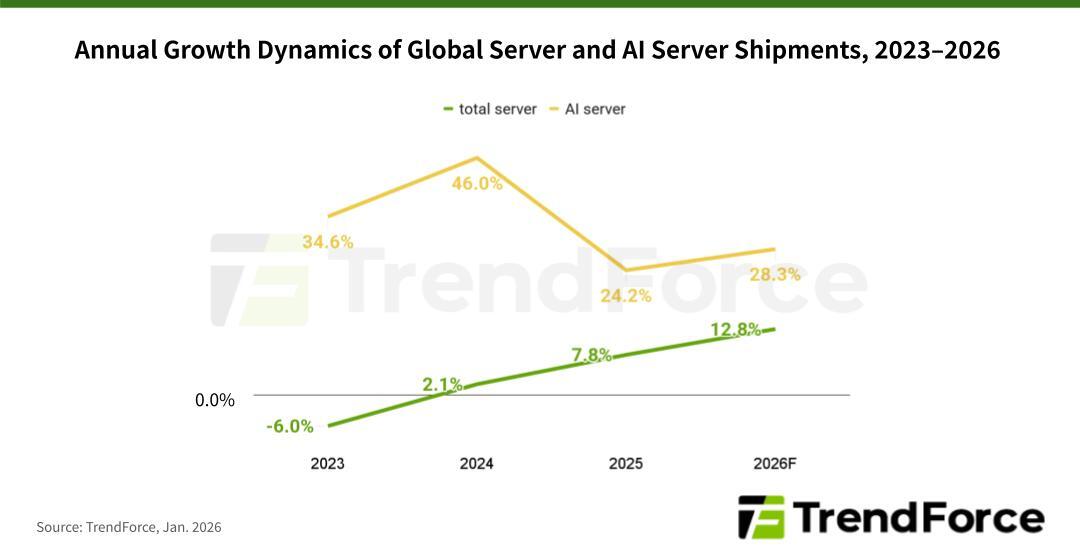

Global shipments of AI servers are set to accelerate sharply in 2026, driven by rising inference workloads, renewed cloud investment cycles, and the growing adoption of custom silicon. According to new market research by TrendForce, worldwide AI server shipments are forecast to grow by more than 28% year over year, significantly outpacing the broader server market, which is expected to expand by 12.8% during the same period.

The outlook reflects a structural shift in how cloud service providers deploy AI infrastructure and monetize artificial intelligence at scale.

The forecast comes as North American hyperscalers continue to pour capital into AI-focused data center expansion. TrendForce attributes much of the momentum to cloud service providers intensifying investments to support inference-heavy services such as AI copilots, autonomous agents, and generative AI platforms now entering mass adoption. While earlier cycles emphasized training large language models using GPU-dense systems, the market is now tilting toward inference, which relies heavily on a mix of AI accelerators and general-purpose servers.


Shifting From Training to Inference

Between 2024 and early 2025, server demand was largely driven by AI training workloads built around GPUs paired with high-bandwidth memory for parallel processing. That focus has begun to evolve. Since the second half of 2025, cloud providers have increasingly prioritized inference as a commercial use case, deploying AI agents, LLaMA-derived applications, and upgraded productivity assistants to end users at scale. These inference pipelines often span both dedicated AI servers and conventional servers that handle orchestration, storage, and pre- and post-processing tasks.

TrendForce notes that this transition is directly influencing procurement strategies. Google and Microsoft, in particular, are expected to ramp up purchases of general-purpose servers to handle the enormous daily inference traffic generated by services such as Gemini and Copilot. At the same time, older infrastructure acquired during the 2019–2021 cloud investment boom is reaching replacement age, further fueling shipment growth.

Capital expenditure across the top five North American cloud providers – Google, AWS, Meta, Microsoft, and Oracle – is projected to rise by roughly 40% year over year in 2026. This spending will be split between new AI infrastructure, replacement of aging assets, and the expansion of regional and sovereign cloud deployments, especially in regulated markets.

On the technology side, GPUs will remain dominant, accounting for nearly 70% of AI server shipments. Systems based on NVIDIA’s GB300 platform are expected to lead volumes, while newer VR200-based designs gain traction later in the year. However, the fastest growth is expected in ASIC-based AI servers, which are forecast to represent nearly 28% of shipments in 2026 – the highest share since 2023.

Google and Meta are at the forefront of this shift, expanding the use of in-house ASICs to improve efficiency and control costs. Google’s Tensor Processing Units, originally designed for internal workloads, are increasingly being offered to external customers through Google Cloud, including AI startups such as Anthropic. TrendForce expects shipment growth of ASIC-based AI servers to outpace that of GPU-based systems as custom silicon strategies mature.

Executive Insights FAQ

Why are AI server shipments growing faster than overall server shipments?

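Inference services are scaling rapidly, and hyperscalers are directing a large share of their rising capital expenditure toward accelerator-based AI infrastructure. This lifts AI server volumes faster than the overall market, even as general-purpose servers also grow to support end-to-end workloads.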

What is driving the renewed demand for general-purpose servers?

Inference pipelines rely on conventional servers for orchestration, storage, and data processing, in addition to accelerator-based systems.

Why are ASIC-based AI servers gaining market share?

Custom ASICs offer better efficiency and cost control for large-scale, predictable workloads, making them attractive to hyperscalers.

Which regions are contributing most to AI server growth?

North American hyperscalers remain the primary driver. Sovereign cloud projects and edge AI deployments worldwide add further demand.

Will GPUs remain dominant despite ASIC growth?

Yes, GPUs will still account for the majority of shipments, but their relative share will gradually decline as ASIC adoption increases.