11 Best Web Hosting India 2026, Jan 🇮🇳[Updated List]

New to Web Hosting? Have no idea what to do if you want your Indian Website…

![11 Best Web Hosting India 2026, Jan 🇮🇳[Updated List]](https://wiredgorilla.com/wp-content/uploads/2026/01/11-best-web-hosting-india-2026-jan-f09f87aef09f87b3updated-list-768x760.webp)

Do you own a business or have a blog? If yes, then you might know that…

Global cloud and AI service providers are under mounting pressure to redesign their infrastructure as the…

The year 2025 was an eventful one for Linux. From Rust making inroads in the kernel to AI…

Picking a hosting solution that is the right one for your plans is the prime (and…

Hey…just try Twingate….you’ll never look at VPN the same: https://ntck.co/twingate-networkchuck I built another AI supercomputer with…

AWS has unveiled Graviton5, its most advanced generation of custom-built Arm-based processors, marking a significant escalation…

The GNOME app ecosystem is on fire these days. Whatever your needs, there’s probably an app…

The 2025 United Nations Climate Change Conference (sometimes referred to as COP30) is taking place in…

The blazing fast speed and highest performance of your websites have always been at the top…

Website performance is critical to your online success. Faster page loads result in better conversion rates,…

Oracle Cloud Infrastructure (OCI) is extending its partnership with chipmaker Ampere by announcing the upcoming launch…

Over the past five years, private-sector funding for fusion energy has exploded. The total invested is…

Want readers from Europe to visit your website and experience zero latency when browsing…

The OpenStack community has announced the release of OpenStack 2025.2, also known as Flamingo, marking the…

Quantum entanglement — once dismissed by Albert Einstein as “spooky action at a distance” — has…

Minecraft is a game that offers fantastic adventure with limitless possibilities…

Are you running a website or an online store and finding it hard to get it…

When you talk about web hosting, BlueHost has been a popular name for many bloggers. However,…

Novacore Innovations, headquartered in Mumbai, has announced the deployment of its GPU cloud platform powered by…

The new system, known as RAMSES (Research Accelerator for Modeling and Simulation with Enhanced Security), replaces…

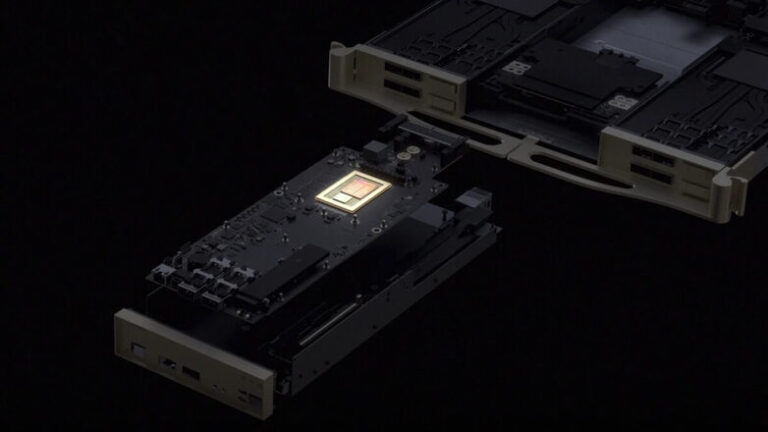

NVIDIA is bringing its latest RTX PRO 6000 Blackwell Server Edition GPU to the mainstream enterprise…

The day before my thesis examination, my friend and radio astronomer Joe Callingham showed me an…

![Top 7 Best Web Hosting in Netherlands 2025 🇳🇱 [Reviewed]](https://wiredgorilla.com/wp-content/plugins/wp-fastest-cache-premium/pro/images/blank.gif)

Want a European audience to find your website and browse its information without experiencing…